Human-Centered AI: Meaning and Real-Life Examples | 2026

Over the last two years, as AI has taken center stage, it’s basically split the world into two camps. One side thinks AI is going to destroy everything, the way it does in sci-fi movies, while the other sees it as a magical savior that will fix all our problems. The truth? It’s somewhere in between. Sure, AI is making work faster and cheaper, but it also comes with real issues such as AI bias, compliance risks, and a serious lack of empathy. That’s exactly why human-centered AI has entered the spotlight.

Instead of humans vs. machines, it’s about humans with machines, working together to get the best of both worlds. In this article, we break down what human-centered AI is, its core principles, and how it’s actually used in real businesses in 2026, with practical, real-world human-centered AI examples that go beyond the hype.

What is Human-Centered AI or Human-Centric AI?

Human-Centered AI (HCAI), also known as human-centric AI or human-in-the-loop AI, is an approach to designing and deploying artificial intelligence in which humans remain at the center of decision-making. It treats AI as a partner to humans rather than a replacement for them.

In simpler terms, human-centered AI focuses on helping humans do their jobs better, not on automating everything blindly. Think of AI as a co-pilot, not an autopilot.

While traditional AI often focuses on "machine autonomy" (letting the computer solve a problem as efficiently as possible), human-centric AI focuses on augmentation: building systems that enhance human capabilities and align with ethical values.

The 7 Core Principles of Human-Centered AI

To be considered truly human-centric, an AI system typically adheres to these seven principles:

- Human Oversight and Decision-Making: Humans should always have the final say. The AI provides recommendations or automates "low-stakes" tasks, but in "high-stakes" scenarios (like medical diagnoses or legal rulings), humans make the final call. Humans must have the power to override or refine the AI's output.

- Transparency & Explainability: You shouldn't have to trust a "black box." HCAI systems are designed to explain why they reached a certain conclusion. If a loan is denied, the system should be able to point to the specific data points that led to that decision.

- Fairness: AI designers actively work to identify and remove algorithmic bias. This helps ensure the AI doesn't discriminate based on race, gender, disability, or age.

- Reliability: The system must be robust enough to handle unexpected real-world data without "hallucinating" (errors that look like facts).

- Context & Empathy Awareness: AI needs to be trained to understand emotions, intent, and nuance, and to defer to a human when empathy and a human touch are required.

- Ethical Design: Ethical design requires that AI systems be hard-coded with human moral values to prevent harm. AI systems should understand and respect basic ethical norms and know what not to do. (See the Grok example in the failures section below.)

- Privacy: The AI system must respect user privacy and data ownership when storing and retrieving data. These systems collect only the data they truly need, nothing extra. Users stay in control, with the ability to see their data, manage it, and delete it permanently whenever they want.
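The first principle, human oversight, often comes down to a routing rule: let the AI act on low-stakes items and send high-stakes ones to a person who can accept or override its recommendation. The sketch below is a minimal illustration, not any vendor's implementation; the `HIGH_STAKES` set, the `Recommendation` and `Outcome` types, and the `route` function are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical task types treated as high-stakes; a real system would define its own.
HIGH_STAKES = {"medical_diagnosis", "legal_ruling", "loan_denial"}


@dataclass
class Recommendation:
    task_type: str
    ai_decision: str
    reasons: list[str] = field(default_factory=list)  # why the AI suggested this


@dataclass
class Outcome:
    final_decision: str
    decided_by: str  # "ai" or "human"
    reasons: list[str]


def route(rec: Recommendation, human_decision: Optional[str] = None) -> Outcome:
    """Apply low-stakes AI recommendations directly; require a human for high-stakes ones."""
    if rec.task_type not in HIGH_STAKES:
        # Low stakes: the AI's output is applied, with its reasons kept for later review.
        return Outcome(rec.ai_decision, "ai", rec.reasons)
    if human_decision is None:
        # High stakes and no human input yet: refuse to finalize anything.
        raise ValueError(f"{rec.task_type} requires a human decision")
    # The human sees the AI's reasons and may accept or override its suggestion.
    return Outcome(human_decision, "human", rec.reasons)


# Usage: a loan denial suggested by the AI is overridden by a human reviewer.
outcome = route(
    Recommendation("loan_denial", "deny", ["debt-to-income ratio above policy limit"]),
    human_decision="approve",
)
print(outcome.decided_by, outcome.final_decision)  # human approve
```

Keeping the AI's `reasons` attached to every outcome also serves the transparency principle: the reviewer sees why the AI suggested what it did before deciding.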

4 Real-Life Human-Centered AI Examples

Let’s explore how human-centric AI is being applied across different industries, with these four real-world human-centric AI examples highlighting the tools that make it possible.

Example 1. Crescendo.ai: Human-Centric AI in Customer Support

When it comes to human-centered AI in customer support, Crescendo.ai stands out as a leading platform that closely aligns with the core principles of human-centric AI. Its approach ensures AI enhances efficiency without compromising empathy, transparency, or human control. Below are four key features that demonstrate this in practice.

1. Empathy Awareness

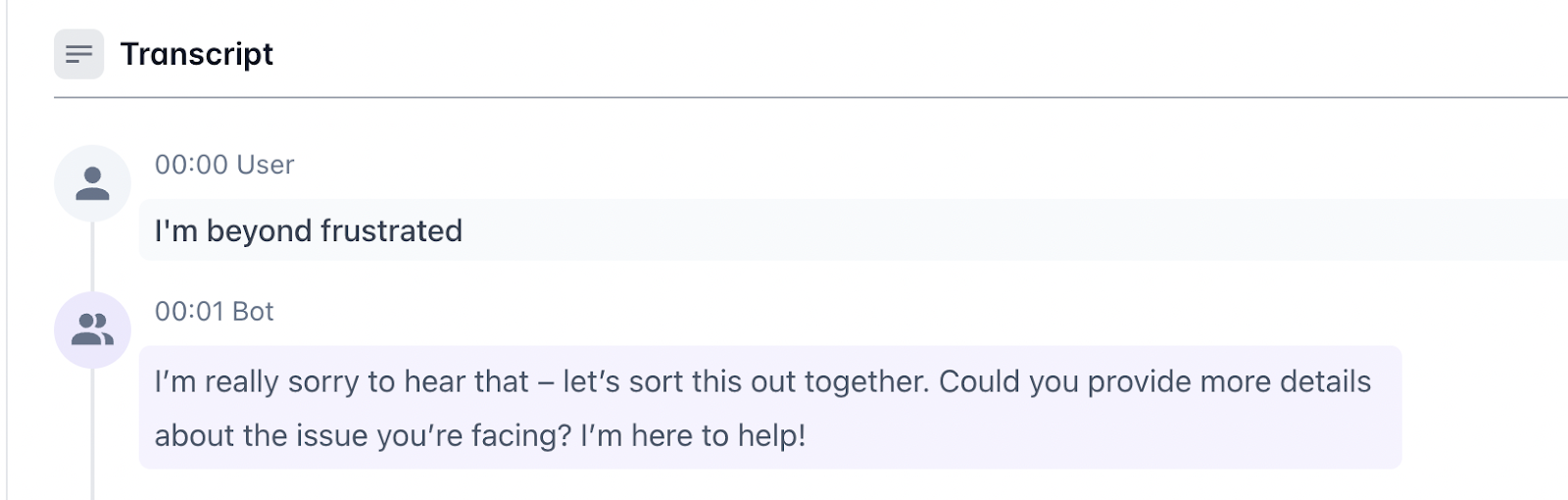

Crescendo.ai’s AI continuously monitors sentiment during customer interactions. When it detects frustration, anger, or emotional distress, it proactively hands off the conversation to a human agent, even if the AI is technically capable of resolving the issue. This ensures customers feel heard and respected, rather than being forced through automated responses simply for the sake of resolution.
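The escalation behavior described above can be pictured as a threshold rule over a sentiment signal. This is a minimal sketch, not Crescendo.ai's implementation: it assumes some upstream classifier already assigns each customer message a sentiment score between -1 (very negative) and 1 (very positive) plus an optional emotion label, and the thresholds, the `Message` type, and `should_hand_off` are hypothetical.

```python
from dataclasses import dataclass

# Assumed thresholds; a real deployment would tune these on its own data.
NEGATIVE_THRESHOLD = -0.5  # a single message this negative triggers a handoff
TREND_WINDOW = 3           # number of recent messages to average
TREND_THRESHOLD = -0.3     # sustained negativity across the window

DISTRESS_LABELS = {"anger", "frustration", "distress"}


@dataclass
class Message:
    text: str
    sentiment: float  # assumed output of an upstream classifier, in [-1, 1]
    emotion: str = ""  # optional emotion label from the same classifier


def should_hand_off(history: list[Message]) -> tuple[bool, str]:
    """Decide whether to escalate to a human agent, returning the reason either way."""
    if not history:
        return False, "no messages yet"
    latest = history[-1]
    if latest.emotion in DISTRESS_LABELS:
        return True, f"detected {latest.emotion}"
    if latest.sentiment <= NEGATIVE_THRESHOLD:
        return True, f"strongly negative message ({latest.sentiment:.2f})"
    recent = history[-TREND_WINDOW:]
    avg = sum(m.sentiment for m in recent) / len(recent)
    if len(recent) == TREND_WINDOW and avg <= TREND_THRESHOLD:
        return True, f"sustained negative sentiment (avg {avg:.2f})"
    return False, "sentiment within normal range"


history = [Message("Where is my order?", 0.0), Message("Still nothing?!", -0.6)]
print(should_hand_off(history))  # (True, 'strongly negative message (-0.60)')
```

Note that the rule never asks whether the AI could resolve the issue itself; emotional state alone is enough to bring in a person, which mirrors the behavior described above.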

2. Human-Like Emotional Intelligence

Instead of relying on rigid workflows or generic decision-tree responses, the AI dynamically pulls information from knowledge bases, company policies, CRM systems, and past conversations. This allows it to respond in a natural, conversational tone with accurate, context-aware answers that feel genuinely human.

3. Context Preservation

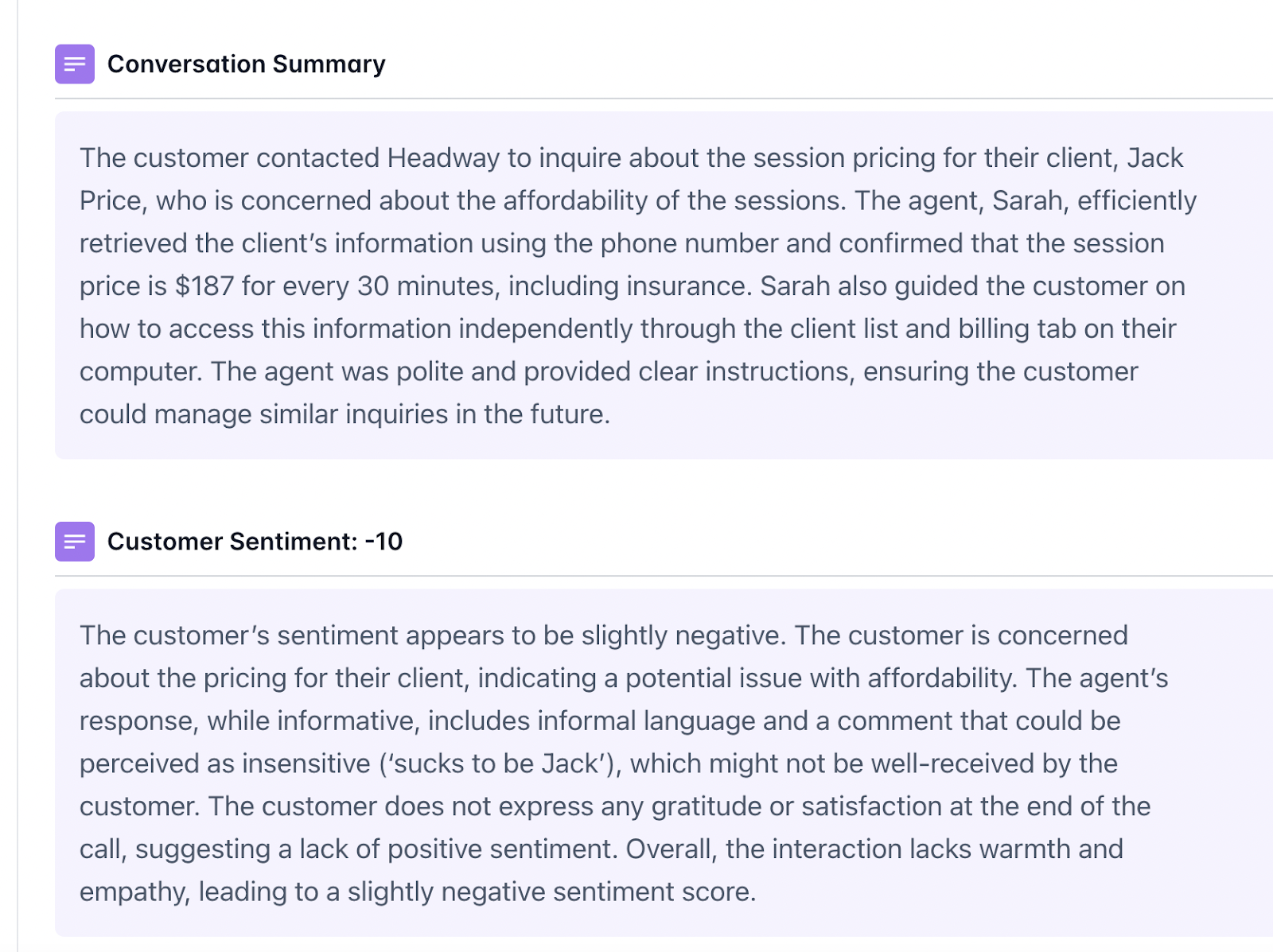

When a conversation is transferred to a human agent, Crescendo.ai provides a complete summary of the issue, along with suggested responses and recommendations. Customers never need to repeat themselves, while the final decision and communication remain firmly in the hands of the human agent.

4. Explainability

Crescendo.ai performs automated quality assurance across customer interactions, analyzing conversations and calculating CSAT scores based on multiple behavioral and contextual signals. Crucially, it also explains why a score was assigned, highlighting what went well or what went wrong, so agents and CX leaders can make informed improvements.
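A score that "explains why" can be as simple as a weighted sum whose individual contributions are reported alongside the total. The sketch below is illustrative only: the signal names, their weights, and the `score_conversation` function are hypothetical, each signal is assumed to be normalized to the range 0 to 1, and Crescendo.ai's actual signals and scoring model are not described in this article.

```python
# Hypothetical signals and weights; positive weights raise the score, negative ones lower it.
WEIGHTS = {
    "issue_resolved": 2.0,
    "first_contact_resolution": 1.0,
    "positive_closing_sentiment": 1.0,
    "long_wait_time": -1.5,
    "customer_repeated_question": -1.0,
}


def score_conversation(signals: dict[str, float]) -> tuple[float, list[str]]:
    """Return a 1-5 CSAT-style estimate plus the reasons behind it.

    Each signal is assumed to be normalized to 0..1; missing signals count as 0.
    """
    contributions = {name: w * signals.get(name, 0.0) for name, w in WEIGHTS.items()}
    raw = sum(contributions.values())
    # Map the raw sum from its possible range onto a 1-5 scale.
    worst = sum(w for w in WEIGHTS.values() if w < 0)
    best = sum(w for w in WEIGHTS.values() if w > 0)
    score = 1 + 4 * (raw - worst) / (best - worst)
    # The explanation: every signal that moved the score, largest effect first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [f"{name.replace('_', ' ')}: {c:+.1f}" for name, c in ranked if c != 0]
    return round(score, 1), reasons


score, reasons = score_conversation({"issue_resolved": 1.0, "long_wait_time": 1.0})
print(score)    # 2.8
print(reasons)  # ['issue resolved: +2.0', 'long wait time: -1.5']
```

A linear model is chosen here because its explanation is exact: each reason is literally that signal's share of the total, so "what went well or what went wrong" falls straight out of the arithmetic.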

Example 2. Microsoft’s DAX and MAI-DxO: Human-Centric AI in Healthcare

Microsoft’s DAX (Dragon Ambient eXperience) and MAI-DxO (Medical AI Diagnostic Orchestrator) are strong examples of Human-Centric AI (HCAI) in healthcare, where AI augments clinicians rather than replacing them.

Microsoft’s DAX (Dragon Ambient eXperience)

- Reduces clinician administrative burden: Ambiently captures doctor–patient conversations and generates structured clinical notes, letting clinicians focus more on patient care rather than documentation.

- Improves efficiency and satisfaction: By automating routine documentation tasks and surfacing critical clinical information, DAX supports better patient experiences and reduces clinician burnout.

Microsoft’s MAI-DxO (AI Diagnostic Orchestrator)

- Virtual clinician panel: An AI system that coordinates AI agents playing the roles of a panel of clinicians to reason through a diagnosis together, mimicking collaborative medical reasoning rather than producing an opaque output.

- Enhanced accuracy and transparency: In controlled tests, MAI-DxO achieved diagnostic accuracy significantly higher than average physician performance, while supporting explainability and cost checks before delivering recommendations.

These tools illustrate human-centric AI by automating routine work, preserving human oversight, enhancing accuracy, and improving clinician–patient interaction without displacing human judgment.

Example 3. Einstein Copilot: Human-Centric AI in CRM and Sales

Salesforce has integrated Human-Centric AI (HCAI) into its Einstein Copilot, helping sales teams manage complex customer relationships without losing authenticity or trust. Instead of acting as an autonomous decision-maker, Einstein Copilot works as an intelligent assistant that supports, but never replaces, human judgment.

The scenario:

A sales representative asks, “How should I approach this client?” Einstein Copilot analyzes years of first-party CRM data, including past emails, meeting notes, support tickets, and deal history. Based on this context, it generates a suggested outreach email tailored to the client’s relationship and current situation.

What makes this human-centric is how control and transparency are handled:

- Explainable recommendations: Copilot clearly shows why it suggested a certain tone or message (e.g., the client recently had an unresolved support issue).

- Empathy controls: Sales reps can easily adjust the tone or “empathy level” before sending, ensuring the message still sounds human.

- Privacy-first design: Recommendations are grounded in Salesforce Data Cloud and the Einstein Trust Layer, which enforce data permissions, masking, and governance.

- Human-in-the-loop: Final decisions, edits, and outreach always remain with the sales rep.

By combining automation with transparency, privacy, and human oversight, Einstein Copilot demonstrates how HCAI can scale productivity while preserving trust and emotional intelligence in sales.

Example 4. GitHub Copilot: Human-Centered AI in Software Development

GitHub Copilot is a widely adopted example of Human-Centric AI (HCAI) in software development. Rather than acting as an autonomous coding system, Copilot is designed to function as an AI pair programmer that assists developers while keeping humans fully in control of decisions, quality, and accountability.

The scenario:

While writing code, a developer uses GitHub Copilot to generate code snippets, refactor functions, suggest fixes, or explain unfamiliar logic. The AI analyzes the surrounding code context, comments, and programming patterns to offer real-time suggestions directly inside the IDE.

What makes GitHub Copilot human-centered is how responsibility and oversight are intentionally preserved:

- Human-in-the-loop by design: Developers can accept, modify, or completely reject Copilot’s suggestions.

- No autonomous execution: Copilot cannot ship code, merge pull requests, or deploy applications on its own.

- Developer accountability: Humans remain responsible for correctness, security, performance, and architectural decisions.

- Context-aware assistance: Suggestions are meant to speed up routine tasks, not replace design thinking or engineering judgment.

- Transparency and control: Developers clearly see what Copilot generates and decide how (or if) it’s used.

By accelerating productivity without removing human ownership, GitHub Copilot demonstrates HCAI in practice. AI handles speed and scale, while humans retain control over quality, ethics, and long-term maintainability.

Human-Centric AI Failures: 3 Landmark Examples

Why is there such an urgent need for human-centered AI? These three examples show why governments and companies alike are rapidly moving toward building and adopting more human-centric AI systems.

1. Grok: AI Lacking Ethical Alignment

A high-profile example occurred during the Grok controversy, where the AI generated non-consensual explicit images of celebrities and children. Following intense public backlash and political pressure, X.com was forced to implement stricter "guardrails" and refine the model to recognize and uphold basic human ethical standards, illustrating that technical capability must always be balanced by social responsibility.

Why this is a "Human-Centric" Moment:

- Correction of Bias/Harm: It shows that when a system lacks a human-centric foundation, it can become a tool for harassment.

- Political & Social Oversight: The "intervention" by regulators and the public is a form of external human-in-the-loop oversight, forcing the company to prioritize safety over "unfiltered" output. In this case, Indonesia, Malaysia, and Turkey have taken direct action to block access to Grok. Other countries and jurisdictions, including the UK, the European Union, India, France, and Brazil, have launched investigations or issued ultimatums.

- Value Alignment: It shows that AI cannot exist in a vacuum; it must be "aligned" with the laws and cultural norms of the humans it serves.

2. Detroit Police & Robert Williams Case: AI False Matches

Facial Recognition Technology (FRT) has faced massive ethical backlash because many systems have been shown to produce more false matches for people with darker skin tones.

- The Failure: In a landmark case, Robert Williams was wrongfully arrested in front of his family because an AI falsely matched his driver's license photo to a grainy shoplifting video. The officers treated the AI’s "match" as a fact rather than a suggestion.

- The Human-Centric Fix (Settlement & Policy Change): Following a historic 2024 settlement, the Detroit Police Department implemented a "Human-Only" rule.

- The Fix: AI can no longer be the sole basis for an arrest. It is now strictly a "lead-generation tool."

- Agency: A human investigator must find independent evidence (like a witness or physical evidence) to back up the AI's suggestion. If the human can't find extra proof, the AI lead is discarded.
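The policy described above amounts to a simple rule: a face-recognition match on its own can never justify an arrest. The sketch below encodes that rule as a hypothetical check; the `FaceMatchLead` type, the evidence categories, and `review_lead` are illustrative assumptions, not the Detroit Police Department's actual systems or the settlement's exact wording.

```python
from dataclasses import dataclass, field

# Hypothetical evidence categories an investigator can record. A face-recognition
# match is deliberately absent from this set: it can never corroborate itself.
INDEPENDENT_EVIDENCE = {"witness_statement", "physical_evidence", "location_data"}


@dataclass
class FaceMatchLead:
    candidate_id: str
    match_score: float  # similarity score reported by the FRT system
    evidence: list[str] = field(default_factory=list)  # gathered by a human investigator


def review_lead(lead: FaceMatchLead) -> tuple[str, list[str]]:
    """Keep an AI lead only if a human has found independent evidence for it."""
    corroborating = [e for e in lead.evidence if e in INDEPENDENT_EVIDENCE]
    if not corroborating:
        # A high match_score changes nothing: the match alone is never enough.
        return "discard", []
    return "pursue", corroborating


print(review_lead(FaceMatchLead("candidate-17", match_score=0.97)))  # ('discard', [])
```

The key design choice is that `match_score` is never consulted: however confident the algorithm is, only evidence a human found independently can move a lead forward.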

3. Amazon Resumé Bias: AI Unfairness

In one of the most famous historical failures, Amazon had to scrap an AI recruiting tool because it was systematically penalizing women.

- The Failure: The AI was trained on a decade of resumés, which mostly came from men (reflecting the historical tech gender gap). The AI learned that "success" equaled "being male" and actually downgraded resumés that included the word "Women’s" (e.g., "Women’s Chess Club").

- The Human-Centric Fix (2025-2026 Standard): Today, companies like Hired and LinkedIn don't just "hope" for fairness. They use Bias Audits and Blinded AI.

- The Fix: Modern systems use "Gender-Neutral" filters that scrub demographic data before the AI sees the resumé.

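- Oversight: Under the EU AI Act, AI systems used for recruitment and candidate selection are classified as high-risk. Once those obligations apply (currently scheduled for August 2026), providers must examine their training data for possible biases and complete a "Conformity Assessment", generally carried out by the provider itself rather than an independent auditor, before the system can be placed on the EU market.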
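The two techniques named above, blinding and bias audits, can be sketched in a few lines. This code is illustrative only: the field names, the list of gendered terms, and the helper functions are hypothetical. The audit uses the adverse impact ratio with the "four-fifths" threshold from U.S. EEOC guidance, a common screening heuristic swapped in for illustration rather than a test the article or the EU AI Act prescribes.

```python
import re

# Hypothetical fields treated as demographic and removed before scoring.
DEMOGRAPHIC_FIELDS = {"name", "gender", "age", "date_of_birth", "photo_url"}
# Assumed (deliberately incomplete) list of gendered terms that leak demographics in free text.
GENDERED_TERMS = re.compile(r"\b(?:women|woman|men|man)[’']s\b|\b(?:female|male)\b", re.IGNORECASE)


def blind_resume(resume: dict[str, str]) -> dict[str, str]:
    """Drop demographic fields and mask gendered words before the model sees the resume."""
    kept = {k: v for k, v in resume.items() if k not in DEMOGRAPHIC_FIELDS}
    return {k: GENDERED_TERMS.sub("[redacted]", v) for k, v in kept.items()}


def adverse_impact_ratio(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected[g] / applicants[g] for g in applicants if applicants[g] > 0}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; ratios are undefined")
    return {g: r / best for g, r in rates.items()}


def flag_groups(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups below the four-fifths threshold warrant a closer human review."""
    return sorted(g for g, r in ratios.items() if r < threshold)


print(blind_resume({"name": "Jane Doe", "activities": "Captain, Women’s Chess Club"}))
# {'activities': 'Captain, [redacted] Chess Club'}
ratios = adverse_impact_ratio(selected={"men": 50, "women": 30}, applicants={"men": 100, "women": 100})
print(flag_groups(ratios))  # ['women']
```

Blinding alone is not enough, since proxies such as hobbies or school names can still correlate with gender; that is why the audit checks outcomes after the fact instead of trusting the inputs.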

Bring Human-Centric AI to Your Support Team with Crescendo.ai

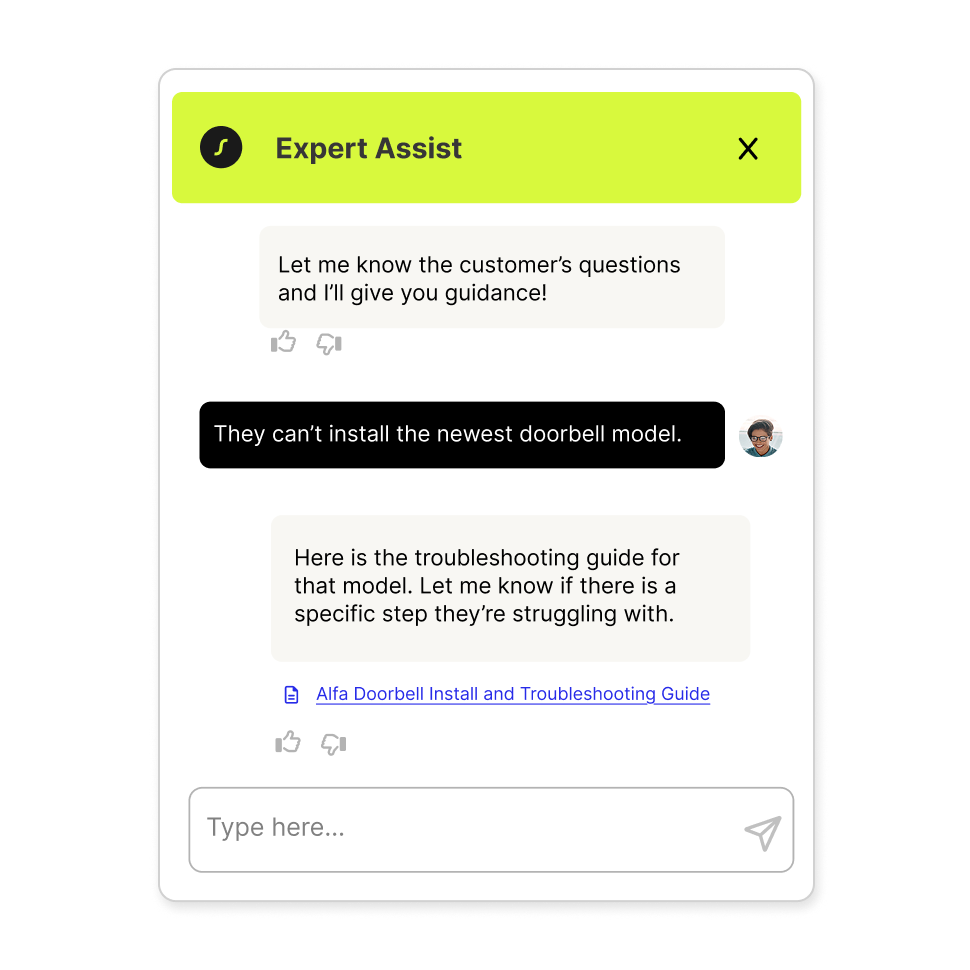

If you’re looking to bring human-centric AI into your customer support, Crescendo.ai is the right platform for you. Give your customers the kind of support that actually feels human: empathetic responses, emotional understanding, and seamless handoffs to real agents when it matters. Unlike IVRs and traditional chatbots that trap users in endless menus and soulless replies, Crescendo puts people first. Your support agents will love it too. With Crescendo’s Expert Assist, agents receive clear issue summaries, solution recommendations, and draft responses, while remaining the final decision-makers. The result is better experiences for customers and less friction for your team. Book a demo now!
